Using Natural Language for Creative Coding

A series of experiments

This project responds to growing concerns that AI is replacing human creativity, and explores how it can instead act as a creative partner. Each experiment begins with natural language prompts that shape visual sketches through mood, metaphor, and behavior rather than direct code, preserving human intuition while leveraging computational possibilities.

Using tools like Processing, p5.js, and Python, the work demonstrates AI's potential to amplify rather than automate creativity, resulting in a collection of small systems that co-create alongside human expression.

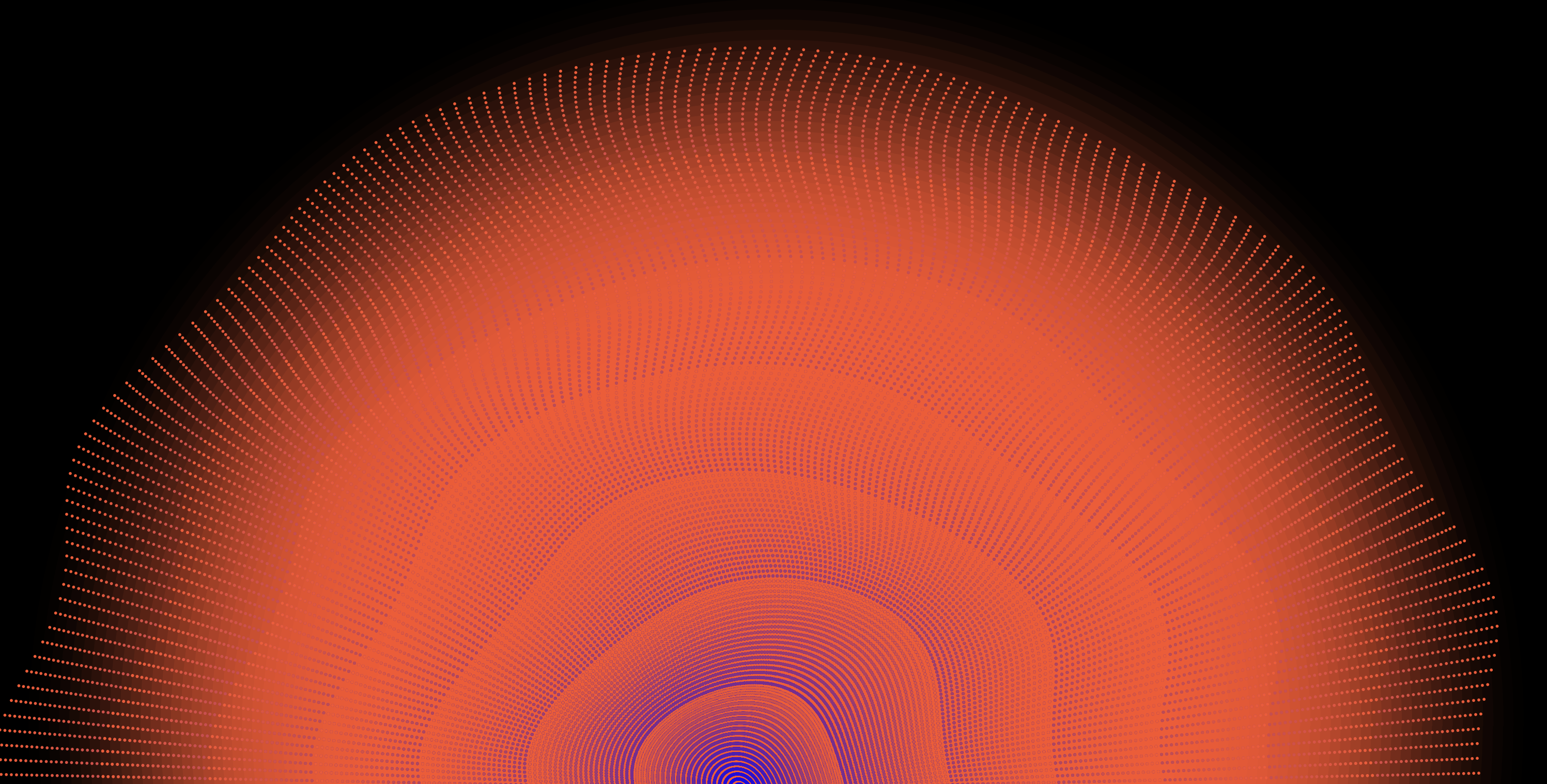

Experiment 01: Deep Space Ripple

Visual + Audio Sketch

Overview

A meditative, eerie sketch inspired by 2001: A Space Odyssey.

Features

- Pulsing radial gradient

- Rippling dot arches, centered

- Shared motion logic between visuals and sound

- Ambient soundscape with drones and glitches

- Prompt Language: Processing (Java)
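
The "shared motion logic" feature above can be pictured as a single time-based value that both the renderer and the sound engine read. The following is a minimal Python sketch of that idea, not the sketch's actual Processing code; the function names, the 8-second period, and the radius and gain ranges are all illustrative assumptions.

```python
import math

def shared_pulse(t, period=8.0):
    """Return a value in [0, 1] that rises and falls once per period (seconds)."""
    return 0.5 - 0.5 * math.cos(2.0 * math.pi * t / period)

def visual_params(pulse, base_radius=120.0, swing=60.0):
    # Gradient radius grows with the pulse (pixel values are illustrative).
    return {"radius": base_radius + swing * pulse}

def audio_params(pulse, min_gain=0.2, max_gain=0.8):
    # Drone gain follows the same pulse so sound and image breathe together.
    return {"gain": min_gain + (max_gain - min_gain) * pulse}

# Sample the pulse at the start, quarter, and midpoint of one period.
for t in (0.0, 2.0, 4.0):
    p = shared_pulse(t)
    print(round(p, 3), visual_params(p), audio_params(p))
```

Because both mappings read the same pulse, changing the period or the curve in one place shifts the visuals and the sound together.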

Reflections

This sketch demonstrates how fast and fluid generative design can be when it is driven by natural language.

Preview of Sketch

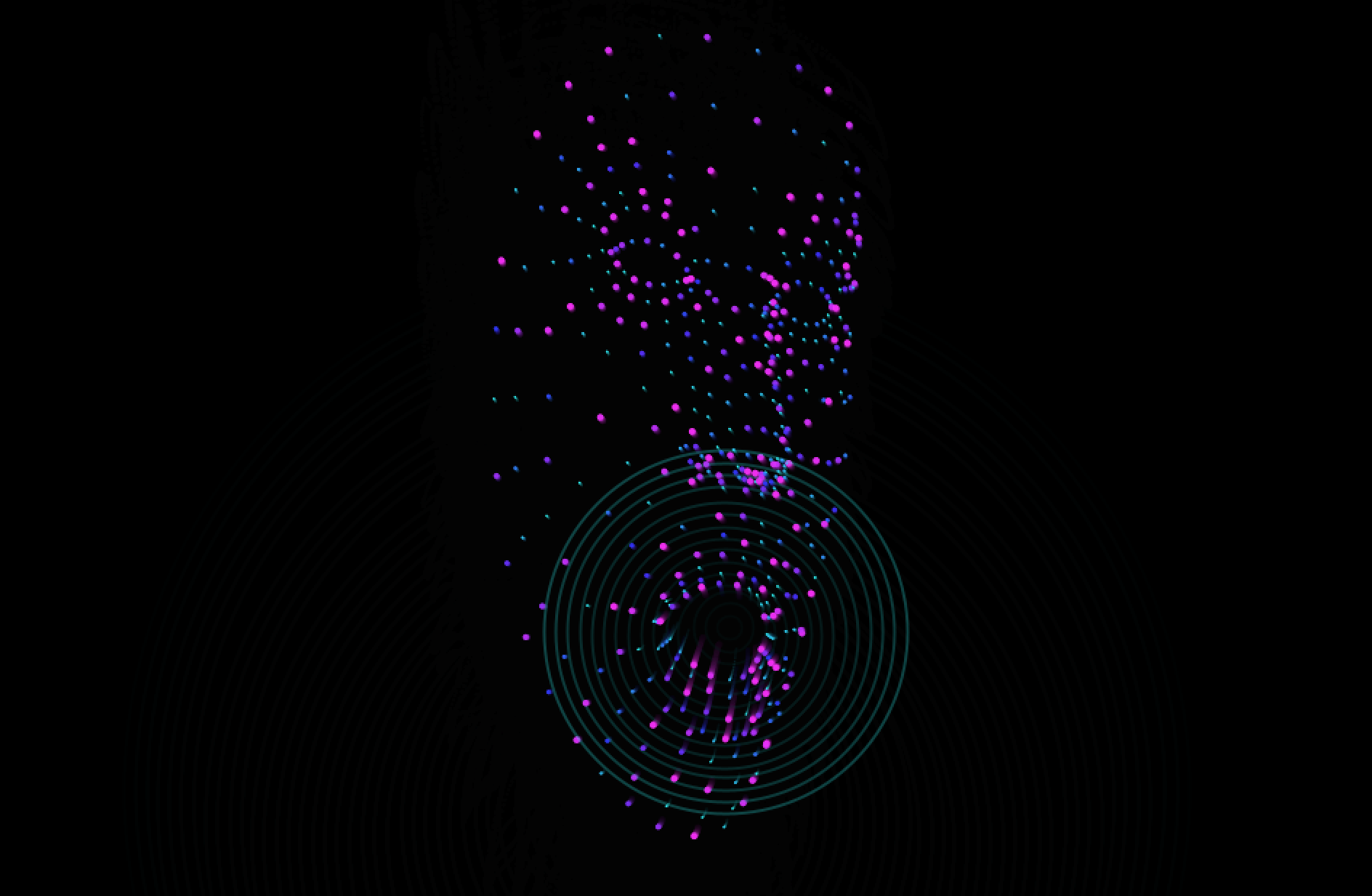

Experiment 02: Face Ripple

Visual + Audio Sketch

Overview

An interactive browser-based sketch where the user’s face becomes a constellation of glowing particles. When the user opens their mouth, the face ripples outward like a soft explosion, accompanied by sound. The sketch is inspired by futuristic UI, somatic interfaces, and the tension between biological motion and digital effects.

Features

- Real-time face tracking using Python + MediaPipe

- OSC (Open Sound Control) communication to Processing

- Particles mapped to 468 facial landmarks

- Breathing motion and parallax drift

- Ripple ring effect triggered by mouth opening

- Overlapping sound bursts with smooth fade

- Prompt Language: Processing (Java) & Python
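
The mouth-opening trigger listed above could be detected on the Python side roughly as follows. This is a sketch under stated assumptions: the landmark indices (13, 14, 78, 308), the thresholds, and the `MouthTrigger` class are hypothetical and should be checked against the landmark map actually in use. The OSC message to Processing is left out; it would be sent wherever `update` returns `True`.

```python
import math

# Assumed indices for the inner upper/lower lip and the mouth corners in a
# 468-point face mesh; verify against the landmark map you actually use.
UPPER_LIP, LOWER_LIP, LEFT_CORNER, RIGHT_CORNER = 13, 14, 78, 308

def mouth_open_ratio(landmarks):
    """landmarks: sequence of (x, y) pairs; returns lip gap / mouth width."""
    gap = math.dist(landmarks[UPPER_LIP], landmarks[LOWER_LIP])
    width = math.dist(landmarks[LEFT_CORNER], landmarks[RIGHT_CORNER])
    return gap / width if width > 0 else 0.0

class MouthTrigger:
    """Fires once when the mouth opens, then re-arms after it closes."""

    def __init__(self, open_at=0.35, close_at=0.20):
        # Two thresholds (hysteresis) stop jitter from retriggering the ripple.
        self.open_at, self.close_at = open_at, close_at
        self.is_open = False

    def update(self, ratio):
        if not self.is_open and ratio >= self.open_at:
            self.is_open = True
            return True  # the ripple and sound burst would start here
        if self.is_open and ratio <= self.close_at:
            self.is_open = False
        return False
```

Dividing the lip gap by the mouth width keeps the ratio roughly stable as the face moves closer to or farther from the camera.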

Reflections

This sketch explores how digital representations of the body can feel both intimate and alien. Using natural gestures to trigger generative effects allowed me to experiment with presence and absence, focusing on moments when the face builds up or dissolves.

Preview of Sketch

This experiment may take a moment to load. Please allow webcam access when prompted.

Experiment 03: Abstract

Visual

Overview

This experiment generates abstract forms through parametric experimentation with natural language coding in Processing. The sketches explore adjustable parameters such as abstraction intensity, point density, color palettes, and spatial relationships. With an emphasis on controlled abstraction, I designed each composition for flexibility, so that precise adjustments and iterative refinements could steer it toward the desired visual outcome.

Features

- Generative abstraction of point clouds and line structures

- Parametric control of warp intensity, bloom strength, and echo delay

- Polar coordinate transformations for radial distortions

- Time-based echo effects layering past frames into motion trails

- Dynamic point density adjustment and distance-based interactions

- Organic glass-like textures through overlapping transparency

- Prompt Language: Processing (Java)
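
A polar coordinate transformation for radial distortion, as listed above, can be sketched in a few lines. This Python example is an illustration only, not the Processing code behind the sketches; the `warp` and `waves` parameters and the sine-based distortion are assumptions chosen to show how a single "warp intensity" value could reshape a point cloud.

```python
import math

def polar_warp(x, y, cx=0.0, cy=0.0, warp=0.3, waves=6):
    """Push a point toward or away from the center depending on its angle."""
    dx, dy = x - cx, y - cy
    r = math.hypot(dx, dy)        # Cartesian -> polar: radius
    theta = math.atan2(dy, dx)    # Cartesian -> polar: angle
    # Scale the radius by an angle-dependent factor; warp=0 leaves it unchanged.
    r_warped = r * (1.0 + warp * math.sin(waves * theta))
    # Polar -> Cartesian, back around the same center.
    return cx + r_warped * math.cos(theta), cy + r_warped * math.sin(theta)

# Warp a small ring of points around the origin.
ring = [(math.cos(a), math.sin(a)) for a in (0.0, 0.5, 1.0, 1.5)]
warped = [polar_warp(x, y) for x, y in ring]
```

Raising `warp` exaggerates the lobes, while `waves` sets how many lobes appear around the center.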

Reflections

By using natural language prompts and example images, I was able to consistently generate abstract forms without being slowed by technical barriers. This approach allowed me to maintain focus on the creative exploration of form, rather than getting caught in the mechanics of coding.

Preview of Sketch

Experiment 04: Sound Visualizer

Visual + Sound + Interaction

Overview

This project started as an audio-reactive visual experiment in Processing, using natural language prompts to guide the design process. Over time, it evolved into a web app that combines sound visualization with interactive features like recording and session playback, powered by real-time audio analysis through the Web Audio API and rendered dynamically with p5.js.

Features

- Generative audio-reactive orb visual (p5.js and Perlin noise)

- Web Audio API analysis mapping amplitude, bass, mid, and treble to visuals

- FFT spectrum display with low-frequency adjustments

- Microphone recording via the MediaRecorder API (saved as a download)

- Single dynamic Record/Stop toggle button

- Development Process: Processing, HTML/CSS/JS/p5/Web Audio
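
The mapping from FFT analysis to bass, mid, and treble values described above can be illustrated by averaging spectrum bins within frequency bands. The sketch below is written in Python for consistency with the other examples rather than in the app's JavaScript; the band edges, the 44100 Hz sample rate, and the `orb_radius` mapping are assumptions, not values taken from the project.

```python
def band_levels(spectrum, sample_rate=44100, bands=None):
    """Average FFT magnitudes within named frequency bands.

    spectrum: magnitudes for bins spanning 0 Hz to sample_rate / 2.
    """
    if bands is None:
        # Band edges in Hz are illustrative assumptions.
        bands = {"bass": (20, 250), "mid": (250, 4000), "treble": (4000, 16000)}
    hz_per_bin = (sample_rate / 2) / len(spectrum)
    levels = {}
    for name, (low, high) in bands.items():
        lo = int(low / hz_per_bin)
        hi = max(lo + 1, int(high / hz_per_bin))  # always cover at least one bin
        chunk = spectrum[lo:hi]
        levels[name] = sum(chunk) / len(chunk) if chunk else 0.0
    return levels

def orb_radius(levels, base=80.0, gain=0.5):
    # Hypothetical mapping: bass energy swells the orb.
    return base + gain * levels["bass"]
```

In a browser, `spectrum` would correspond to the per-bin magnitudes an analyser returns each frame, so the same averaging would run once per draw call.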

Reflections

I began by exploring whether audio input could drive an artistic experience. What started as an experimental project gradually shifted focus toward adapting the concept into a user-friendly web app with functional applications.

Preview of Sketch